Executive Summary

The Physical Renaissance: Why Now?

For decades, we’ve predicted the rise of the machines. We’ve seen them in cinema and read about them in science fiction, but for the first time, the friction between “digital thought” and “physical action” is disappearing. We aren’t just building better tools; we are witnessing the emergence of a new kind of economy.

Artificial intelligence is now very good at understanding the digital world. Large models can read, write, see, and reason over huge amounts of digital information.

Robotics is the other half of that story: machines that can move, sense, and affect the physical world using motors, joints, cameras, LiDAR, microphones, and other sensors. Where AI “thinks,” robots “act.”

Today, these two worlds are still mostly separate:

- AI systems work in a digital environment (text, images, APIs).

- Robots work in the physical environment (objects, people, terrain).

- There is no common layer where robots can easily share what they learn from experience the way models share weights or datasets.

From Digital-Only AI to Embodied Intelligence

Over the last decade, foundation models (GPT, Claude, Gemini, etc.) proved that when you give a system huge amounts of data and a consistent architecture, it can generalize knowledge: learn once, reuse many times.

Robots need something similar, but for embodied experience:

- Understanding not just “what is a cup,” but how heavy it is, how slippery it feels, and how best to grasp it.

- Understanding not just an image of a warehouse, but how to navigate it safely, avoid people, and adapt when shelves move.

- Understanding not just a route on a map, but how to control motors and wheels under wind, friction, or uneven terrain.

This is what we mean by embodied AI: intelligence that doesn’t stop at prediction, but continues all the way to real-world action.

The Collective Intelligence Bottleneck

Despite rapid advances in hardware and foundation models, the growth of the robotics industry is fundamentally constrained by a single bottleneck: the lack of collective intelligence. In the current landscape, every robot learns in isolation.

A warehouse arm may discover a more efficient grasp, or a drone may identify a safer flight path, yet these improvements remain locked within proprietary codebases and hardware-specific stacks.

This “Robot Island” problem forces teams to repeatedly rebuild the same foundations from scratch, spending 70% of their time on maintenance and integration rather than advancing intelligence.

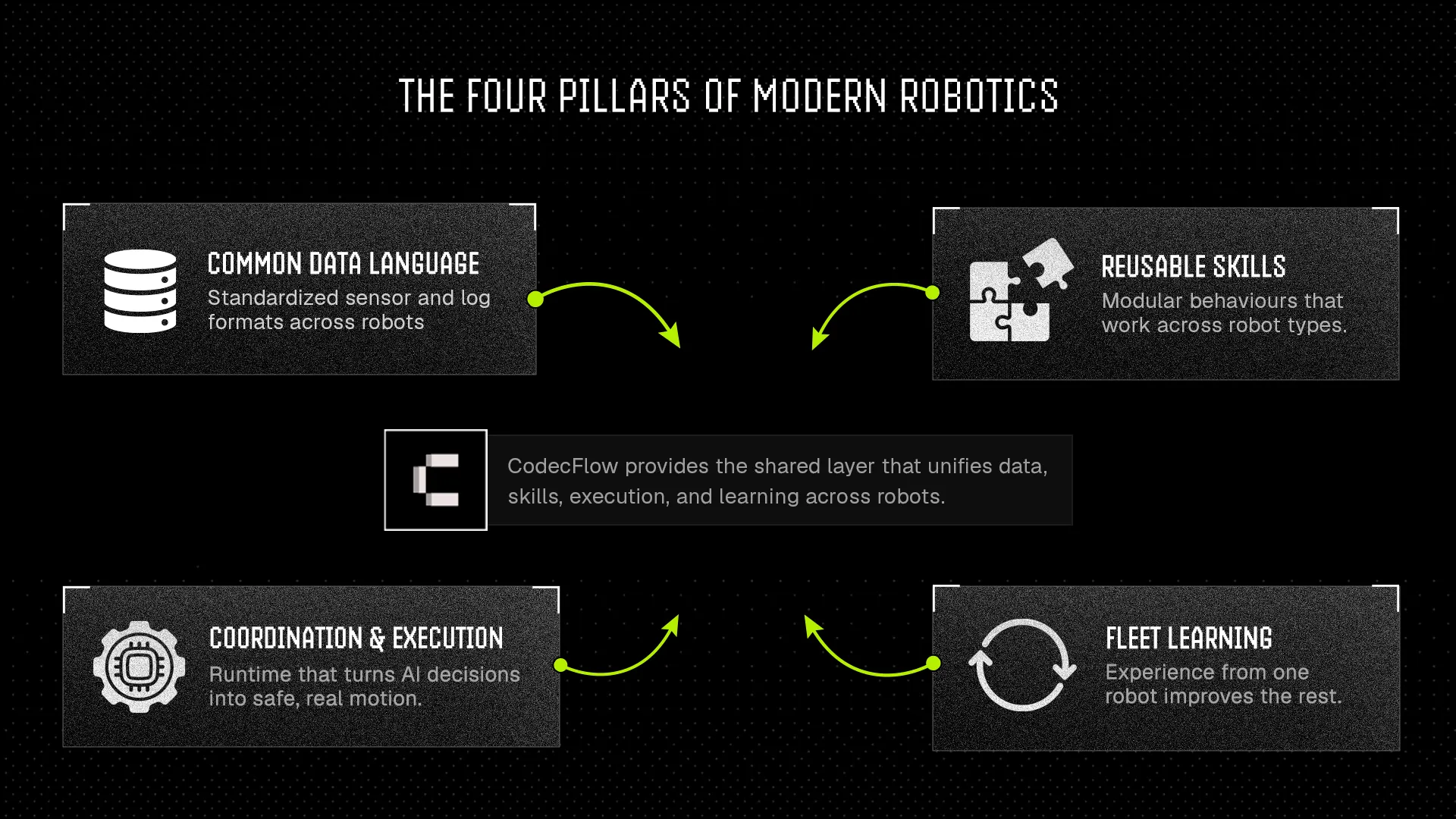

CodecFlow: A Shared Intelligence Layer for Robots

CodecFlow provides the missing link: a unified standard for perception, reasoning, and action. It functions as a shared intelligence layer that decouples a robot’s capability from its physical chassis. By standardizing how machines see the world and execute tasks, CodecFlow allows teams to build once and deploy everywhere.

- Unified Data: Normalizes disparate sensor inputs into a common format, allowing data to be shared and reused across tasks and teams.

- Modular Operators: Packages complex behaviors, like grasping or navigation, into hardware-agnostic “apps” that can be composed and upgraded without rewriting the underlying system.

- Compounding Intelligence: Creates a feedback loop where real-world experience from a single machine propagates across the entire network, ensuring that robotic knowledge compounds over time rather than resetting with every new deployment.
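This section does not describe CodecFlow’s actual interfaces, so the following is only a minimal Python sketch of the pattern the three points above describe, built on our own assumptions. Every name in it (`Observation`, `SensorAdapter`, `LidarAdapterMM`, `SonarAdapterCM`, `stop_if_blocked`, `OperatorRegistry`) is hypothetical and is not part of any CodecFlow API. Device-specific adapters normalize raw readings into one common format. An operator is written only against that format. A shared registry lets a newer operator version reach every robot that looks it up by name.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass(frozen=True)
class Observation:
    """Hypothetical common format: every adapter emits the same fields and units."""
    robot_id: str
    timestamp_s: float                       # seconds
    obstacle_distances_m: tuple[float, ...]  # range readings in metres


class SensorAdapter(Protocol):
    """Hypothetical contract: turn a vendor-specific reading into an Observation."""
    def normalize(self, raw: dict) -> Observation: ...


class LidarAdapterMM:
    """Hypothetical adapter for a sensor that reports milliseconds and millimetres."""
    def normalize(self, raw: dict) -> Observation:
        return Observation(
            robot_id=raw["id"],
            timestamp_s=raw["t_ms"] / 1000.0,
            obstacle_distances_m=tuple(r / 1000.0 for r in raw["ranges_mm"]),
        )


class SonarAdapterCM:
    """Hypothetical adapter for a sensor that reports seconds and centimetres."""
    def normalize(self, raw: dict) -> Observation:
        return Observation(
            robot_id=raw["name"],
            timestamp_s=float(raw["time"]),
            obstacle_distances_m=tuple(r / 100.0 for r in raw["cm"]),
        )


Operator = Callable[[Observation], str]


def stop_if_blocked(obs: Observation, safety_margin_m: float = 0.5) -> str:
    """Hardware-agnostic operator: it only reads the common Observation format."""
    if obs.obstacle_distances_m and min(obs.obstacle_distances_m) < safety_margin_m:
        return "stop"
    return "continue"


class OperatorRegistry:
    """Hypothetical shared registry: robots resolve operators by name, so a newly
    published version reaches every robot that asks for the latest one."""

    def __init__(self) -> None:
        self._versions: dict[str, list[Operator]] = {}

    def publish(self, name: str, op: Operator) -> int:
        """Store a new version and return its 1-based version number."""
        self._versions.setdefault(name, []).append(op)
        return len(self._versions[name])

    def latest(self, name: str) -> Operator:
        """Return the most recently published version of an operator."""
        return self._versions[name][-1]


if __name__ == "__main__":
    arm = LidarAdapterMM().normalize({"id": "arm-1", "t_ms": 1000, "ranges_mm": [1200, 450]})
    drone = SonarAdapterCM().normalize({"name": "drone-7", "time": 1, "cm": [300, 250]})

    registry = OperatorRegistry()
    registry.publish("stop_if_blocked", stop_if_blocked)
    op = registry.latest("stop_if_blocked")
    print(op(arm), op(drone))  # stop continue

    # An improved version (wider margin) is published once and picked up by every robot.
    registry.publish("stop_if_blocked", lambda obs: stop_if_blocked(obs, safety_margin_m=3.0))
    op = registry.latest("stop_if_blocked")
    print(op(arm), op(drone))  # stop stop
```

The operator never touches vendor-specific fields, so the same `stop_if_blocked` logic runs unchanged on both the arm and the drone. Only the adapters are hardware-specific. A real system would also need versioning policy, validation, and safety review before an update propagates. This sketch omits all of that.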

Robots should not need to be rebuilt from scratch to do meaningful work.

CodecFlow lets teams build robotic intelligence once and run it across many machines, so perception, decision-making, and control can move between robots instead of being locked to a single platform.

Instead of fragmented, hardware-specific stacks, CodecFlow provides a shared intelligence layer where robots can see, reason, and act through a common structure.

Skills become reusable. Improvements propagate across fleets. Intelligence compounds over time.

This is how teams move faster, deploy with confidence, and scale robotics systems without rebuilding the fundamentals for every new machine.

Who CodecFlow Is For

CodecFlow is built for people turning robotics into real-world systems:

- Engineers shipping robots into production

- AI developers building embodied agents

- Teams operating and scaling robot fleets

- Researchers experimenting with new behaviors and models

- Builders who want to publish, share, or monetize robotic capabilities

If you need robotics intelligence that travels across machines instead of starting over every time, CodecFlow is designed for you.

What You Can Build With CodecFlow

CodecFlow is designed around how robotics teams actually work: connecting sensing, reasoning, and execution into one coherent system.

With CodecFlow, you can:

- Unify sensor inputs

- Turn perception into structure

- Connect goals to actions

- Run reliable control across hardware

- Let systems improve themselves

CodecFlow turns disconnected components into a living, shared intelligence system that grows more capable the more it is used.

The Machine Economy

As billions of machines come online, the value of robotics is shifting away from the physical construction of machines toward the Coordination Layer that connects them.

CodecFlow serves as the foundational infrastructure for this new Machine Economy, where economic value is driven by the capabilities robots provide, the coordination between them, and the collective intelligence that guides their actions. We are moving beyond the era of isolated hardware projects and into a future of networked agency.