How CodecFlow Works

The Intelligence Pipeline: Engineering Physical Autonomy

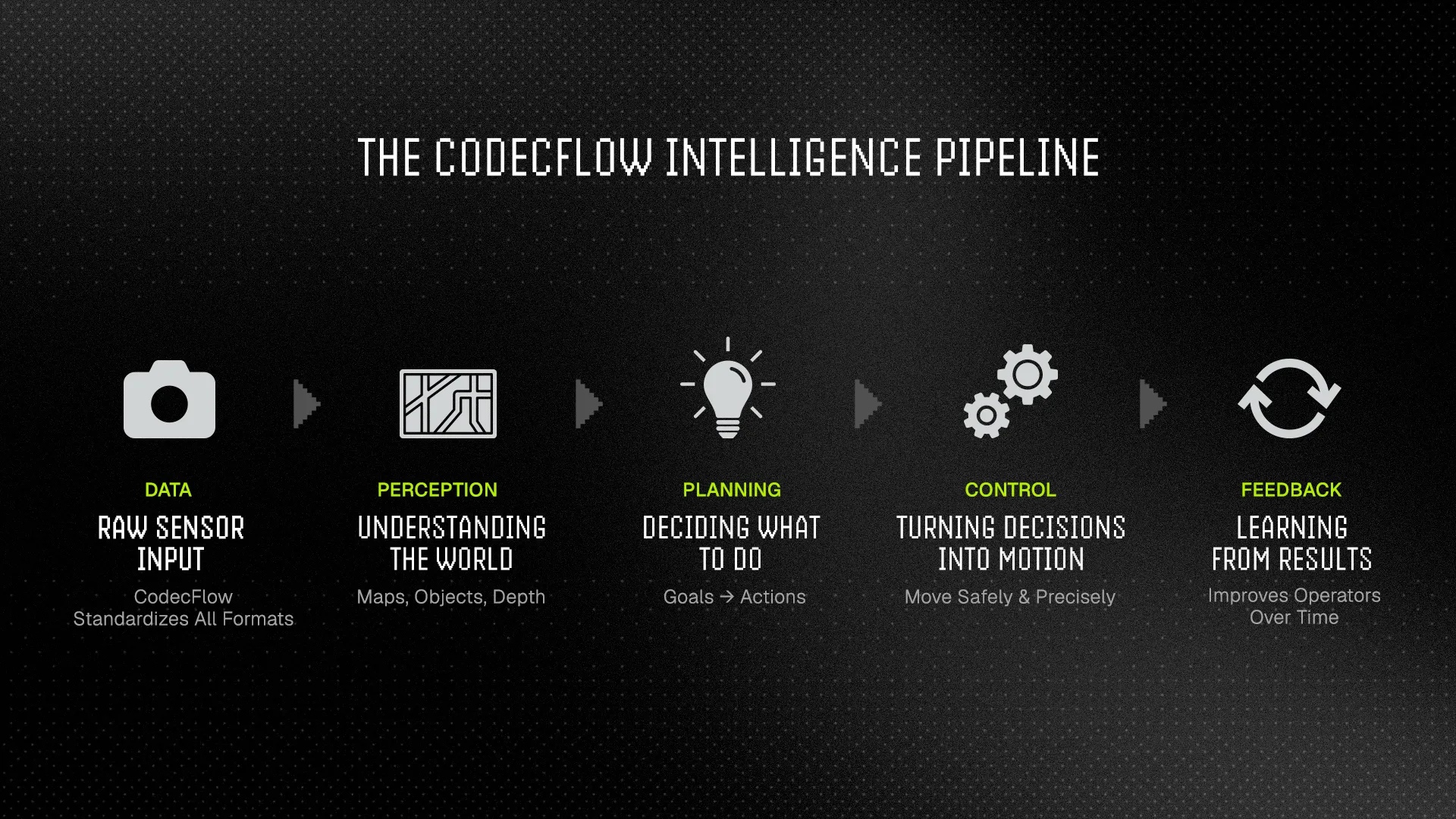

To the casual observer, a robot moving through a crowded room looks like a single act. In reality, it is a symphony of thousands of micro-decisions happening in milliseconds.

CodecFlow is the conductor of this symphony, transforming chaotic environmental noise into purposeful, elegant action. This is the pipeline that turns a machine into an agent.

1. The Sensory Foundation: The Unified Data Layer

(“Teaching every machine to speak the same language”)

Robots are inherently multilingual, and not in a good way. Developers typically spend 70% of their time writing “glue code” just to get incompatible sensors (LiDAR, RGB cameras, encoders) to talk to one another.

CodecFlow replaces this friction with a Unified Data Schema. By wrapping every input in a standardized “manifest” complete with timestamps and spatial metadata, CodecFlow makes data liquid: it flows between hardware, simulations, and the cloud, so a perception model trained on one robot can be applied directly to another.
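To make the “manifest” idea concrete, here is a minimal sketch of what a standardized sensor envelope could look like. The field names (`sensor_id`, `modality`, `timestamp_ns`, `frame_id`, `pose`, `units`) and the helpers `wrap_encoder` and `merge_streams` are hypothetical illustrations, not CodecFlow's actual schema. The point is that once every reading carries a shared clock, a coordinate frame, and explicit units, streams from different vendors can be merged into one timeline without per-sensor glue code.

```python
import heapq
import math
import time
from dataclasses import dataclass
from typing import Any, Iterable, List, Optional


@dataclass(frozen=True)
class Pose:
    """Sensor mounting pose in the robot's base frame (metres, radians)."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    yaw: float = 0.0


@dataclass(frozen=True)
class SensorManifest:
    """Hypothetical standardized envelope around one raw sensor reading."""
    sensor_id: str
    modality: str        # e.g. "lidar", "rgb", "encoder"
    timestamp_ns: int    # capture time on a shared monotonic clock
    frame_id: str        # coordinate frame the payload is expressed in
    pose: Pose           # where the sensor is mounted
    payload: Any         # the reading itself, in the units below
    units: str = ""


def wrap_encoder(sensor_id: str, ticks: int, ticks_per_rev: int, pose: Pose,
                 timestamp_ns: Optional[int] = None) -> SensorManifest:
    """Convert vendor-specific encoder ticks to radians and wrap them in a manifest."""
    ts = time.monotonic_ns() if timestamp_ns is None else timestamp_ns
    angle_rad = 2 * math.pi * ticks / ticks_per_rev
    return SensorManifest(sensor_id, "encoder", ts, "base_link", pose, angle_rad, "rad")


def merge_streams(*streams: Iterable[SensorManifest]) -> List[SensorManifest]:
    """Interleave per-sensor streams (each already time-ordered) into one timeline."""
    return list(heapq.merge(*streams, key=lambda m: m.timestamp_ns))


# Usage: two wheel encoders with different capture times.
left = wrap_encoder("wheel_left", 1024, 4096, Pose(y=0.2), timestamp_ns=10)
right = wrap_encoder("wheel_right", 2048, 4096, Pose(y=-0.2), timestamp_ns=5)
timeline = merge_streams([left], [right])  # right comes first: it was captured earlier
```

A frozen dataclass keeps manifests immutable once created, which is a reasonable default when the same reading may be shared between a live controller, a simulator, and a cloud logger.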

2. The Spatial Mind: Environmental Understanding

(“World building in real time”)

Standardizing data is only the first step; a robot must understand its surroundings. CodecFlow’s World Engine provides native APIs that handle the heavy lifting of sensor fusion and 3D mapping.

Instead of building complex SLAM pipelines from scratch, developers use simple calls to identify objects, surfaces, and grasp points. This treats perception like a game engine treats graphics: you focus on the “gameplay” (the robot’s task) while CodecFlow handles the underlying complexity of 3D occupancy and obstacle detection.
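As a rough illustration of the kind of query a 3D occupancy layer answers, the sketch below builds a sparse voxel map from fused points and checks whether a straight path is free. The class `OccupancyGrid` and its methods are hypothetical and do not represent the World Engine API; a production map would also clear free space by ray casting and track occupancy probabilistically, which this sketch deliberately omits.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


class OccupancyGrid:
    """Minimal sparse voxel map: a cell is marked occupied once any point lands in it.

    Simplified stand-in for an occupancy query; no ray casting,
    free-space clearing, or probabilistic updates.
    """

    def __init__(self, resolution_m: float = 0.1) -> None:
        if resolution_m <= 0:
            raise ValueError("resolution_m must be positive")
        self.resolution = resolution_m
        self.occupied: Set[Voxel] = set()

    def to_voxel(self, p: Point) -> Voxel:
        """Quantize a metric point (map frame) to integer voxel indices."""
        r = self.resolution
        return (math.floor(p[0] / r), math.floor(p[1] / r), math.floor(p[2] / r))

    def insert_points(self, points: Iterable[Point]) -> None:
        """Add one fused point cloud, already expressed in the map frame."""
        for p in points:
            self.occupied.add(self.to_voxel(p))

    def is_occupied(self, p: Point) -> bool:
        return self.to_voxel(p) in self.occupied

    def segment_is_clear(self, start: Point, end: Point) -> bool:
        """Sample the straight segment at most half a voxel apart and test each sample."""
        delta = [e - s for s, e in zip(start, end)]
        length = math.sqrt(sum(d * d for d in delta))
        n = max(1, math.ceil(length / (self.resolution / 2)))
        for i in range(n + 1):
            t = i / n
            sample = (start[0] + t * delta[0],
                      start[1] + t * delta[1],
                      start[2] + t * delta[2])
            if self.is_occupied(sample):
                return False
        return True


# Usage: one LiDAR return on a box, then two candidate lanes.
grid = OccupancyGrid(resolution_m=0.1)
grid.insert_points([(1.05, 0.05, 0.05)])
blocked = grid.segment_is_clear((0.0, 0.05, 0.05), (2.0, 0.05, 0.05))
open_lane = grid.segment_is_clear((0.0, 0.55, 0.05), (2.0, 0.55, 0.05))
```

Storing only occupied voxels in a set keeps memory proportional to what the sensors actually saw rather than to the volume of the room. Half-voxel sampling can still miss a segment that only clips a voxel corner; an exact voxel traversal would close that gap.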

3. The Reasoning Core: Vision-Language-Action (VLA)

(“Bridging the gap between intent and movement”)

Knowing the world isn’t enough. Robots must decide what to do.

Most AI models can see (Vision) and talk (Language), but they remain “ghosts in the machine” with no way to move. CodecFlow provides the Action (A). We use a dual-system model that mimics human cognition:

- System 1 (The Intuition): The reactive layer. It handles millisecond-level adjustments, such as balancing on ice or dodging a pedestrian who suddenly steps into its path.

- System 2 (The Architect): The deliberative layer. It handles high-level strategy, such as mapping the most efficient path through a warehouse or sequencing the steps to clean a kitchen.

The Operator Runtime (OPTR) acts as the compiler, merging these two systems into safe, executable behaviors.
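One way to picture how a deliberative plan and a reactive reflex can be merged into a single safe command is the arbitration sketch below. Everything here is an assumption for illustration: `system2_plan`, `system1_reflex`, and `merge_layers` are hypothetical names, the planner is a simple steer-to-waypoint rule, and the reflex is a linear slow-down zone. It is not how OPTR is implemented; it only shows the layering pattern of plan first, let the reflex override, then clamp to hardware limits.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Command:
    vx: float  # forward velocity, m/s
    wz: float  # yaw rate, rad/s


def wrap_angle(a: float) -> float:
    """Map an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def _clamp(v: float, limit: float) -> float:
    return max(-limit, min(limit, v))


def system2_plan(pose: Tuple[float, float, float], waypoint: Tuple[float, float],
                 cruise_mps: float = 1.0, k_heading: float = 1.5,
                 arrive_tol_m: float = 0.1) -> Command:
    """Deliberative layer (hypothetical): head toward the next waypoint at cruise speed."""
    x, y, yaw = pose
    dx, dy = waypoint[0] - x, waypoint[1] - y
    if math.hypot(dx, dy) < arrive_tol_m:
        return Command(0.0, 0.0)
    heading_error = wrap_angle(math.atan2(dy, dx) - yaw)
    return Command(cruise_mps, k_heading * heading_error)


def system1_reflex(cmd: Command, obstacle_dist_m: float,
                   stop_m: float = 0.5, slow_m: float = 1.5) -> Command:
    """Reactive layer (hypothetical): scale forward speed down as an obstacle nears."""
    if obstacle_dist_m <= stop_m:
        return Command(0.0, cmd.wz)  # stop, but still allow turning away
    if obstacle_dist_m < slow_m:
        scale = (obstacle_dist_m - stop_m) / (slow_m - stop_m)
        return Command(cmd.vx * scale, cmd.wz)
    return cmd


def merge_layers(pose: Tuple[float, float, float], waypoint: Tuple[float, float],
                 obstacle_dist_m: float, cruise_mps: float = 1.0,
                 max_vx: float = 1.5, max_wz: float = 1.0) -> Command:
    """Plan, let the reflex override the plan, then clamp to the hardware limits."""
    cmd = system1_reflex(system2_plan(pose, waypoint, cruise_mps), obstacle_dist_m)
    return Command(_clamp(cmd.vx, max_vx), _clamp(cmd.wz, max_wz))


# Usage: same goal, three different obstacle distances.
clear = merge_layers((0.0, 0.0, 0.0), (5.0, 0.0), obstacle_dist_m=10.0)
slowed = merge_layers((0.0, 0.0, 0.0), (5.0, 0.0), obstacle_dist_m=1.0)
stopped = merge_layers((0.0, 0.0, 0.0), (5.0, 0.0), obstacle_dist_m=0.3)
```

Ordering matters in this design: the reflex runs after the planner so it can always veto forward motion, and the final clamp runs last so neither layer can exceed the platform's limits.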

4. Physical Agency: Adaptive Control & Actuation

(“The Nervous System of the Machine”)

A plan is only as good as the robot’s ability to execute it. Traditional control systems are brittle; a slight change in floor friction or wind can cause a robot to fail.

CodecFlow’s control layer is hardware-agnostic and adaptive.

Whether the machine is hydraulic, electric, or pneumatic, our closed-loop system compares the intended motion with the actual motion and corrects the difference in real time.

- Environmental Adaptation: The system automatically adjusts for payload variations or uneven terrain.

- Cross-Platform: The same Navigation Operator can guide a quadruped or a humanoid, provided the hardware profile is mapped.
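The closed-loop idea above (compare intended with actual motion, then correct) is classically realized with a PID controller. The sketch below is a textbook PID loop, not CodecFlow's control layer; the joint model in `simulate` (unit inertia, damping 0.5, constant load torque) and the gains are illustrative assumptions. It shows how the integral term absorbs a payload the controller was never told about: with `ki=0` the joint settles well below its target under load, while with integral action it converges to the setpoint.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID loop with output clamping and conditional-integration anti-windup."""
    kp: float
    ki: float
    kd: float
    output_limit: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured  # intended motion minus actual motion
        candidate_integral = self.integral + error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        raw = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        out = max(-self.output_limit, min(self.output_limit, raw))
        if out == raw:  # only keep integrating while the actuator is not saturated
            self.integral = candidate_integral
        return out


def simulate(load: float, ki: float = 8.0, setpoint: float = 1.0,
             steps: int = 1000, dt: float = 0.01) -> float:
    """Hypothetical joint: dv/dt = u - 0.5*v - load. Returns the final velocity."""
    pid = PID(kp=4.0, ki=ki, kd=0.0, output_limit=10.0)
    v = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, v, dt)
        v += dt * (u - 0.5 * v - load)  # forward-Euler plant step
    return v


# Usage: the same gains with and without an unmodelled payload.
unloaded = simulate(load=0.0)
loaded = simulate(load=2.0)
p_only_loaded = simulate(load=2.0, ki=0.0)  # settles near 0.44, short of the 1.0 target
```

Because the controller only sees the error signal, nothing in it depends on whether the actuator is hydraulic or electric; what changes per platform is the gain tuning and output limits, which is the kind of information a hardware profile would carry.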

5. Compounding Wisdom: The Learning Flywheel

(“The Collective Memory of the Network”)

In the CodecFlow ecosystem, failure is a gift. Every time a robot encounters a new challenge, such as a slippery surface or an unrecognized object, that experience is captured. This creates a powerful network effect: when one robot learns a better way to navigate a specific environment, every other machine using the same Operator receives an “over-the-air” intelligence upgrade. We are shifting from isolated engineering to ecosystem-level evolution.
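The flywheel can be reduced to three steps: log the failure, promote an Operator release only if it measurably improves, and let every robot pull the newer version. The sketch below models just that bookkeeping. `FleetRegistry`, `OperatorRelease`, `Robot.sync`, and the `success_rate` gate are hypothetical names chosen for illustration, and the retraining step that turns logged failures into new parameters is out of scope here, so the improved parameters are supplied by hand.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class OperatorRelease:
    version: int
    params: Dict[str, float]
    success_rate: float  # measured on an evaluation set before release


@dataclass
class FleetRegistry:
    """Hypothetical shared store: robots log failures; better Operators ship fleet-wide."""
    releases: Dict[str, OperatorRelease] = field(default_factory=dict)
    failure_log: Dict[str, List[dict]] = field(default_factory=dict)

    def report_failure(self, operator: str, context: dict) -> None:
        """Capture an edge case (e.g. a slippery surface) for later retraining."""
        self.failure_log.setdefault(operator, []).append(context)

    def propose(self, operator: str, params: Dict[str, float], success_rate: float) -> bool:
        """Promote a candidate only if it beats the current release."""
        current = self.releases.get(operator)
        if current is not None and success_rate <= current.success_rate:
            return False
        version = 1 if current is None else current.version + 1
        self.releases[operator] = OperatorRelease(version, dict(params), success_rate)
        return True


@dataclass
class Robot:
    name: str
    installed: Dict[str, int] = field(default_factory=dict)  # operator -> version

    def sync(self, registry: FleetRegistry) -> List[str]:
        """Pull newer Operator releases (the over-the-air step); return upgraded names."""
        upgraded = []
        for op, release in registry.releases.items():
            if self.installed.get(op, 0) < release.version:
                self.installed[op] = release.version
                upgraded.append(op)
        return upgraded


# Usage: robot "a" hits wet tile; robot "b" benefits from the fix.
registry = FleetRegistry()
registry.propose("navigation", {"max_speed": 1.0}, success_rate=0.90)
a, b = Robot("a"), Robot("b")
a.sync(registry)
b.sync(registry)
registry.report_failure("navigation", {"robot": "a", "surface": "wet tile"})
improved = registry.propose("navigation", {"max_speed": 0.7}, success_rate=0.95)
upgraded = b.sync(registry)  # b never saw wet tile but still receives version 2
```

Gating promotion on an evaluation score is what keeps the loop compounding rather than drifting: a release that does not beat its predecessor is simply never shipped.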

By unifying these five stages, we move away from the ‘one-off’ nature of robotics and toward a world of compounding intelligence.